Hi! I am a postdoctoral research fellow at the National University of Singapore, where I am a member of the NExT++ Lab, working with Prof. Chua Tat-Seng. I received my Ph.D. in Engineering from the Knowledge Engineering Group (KEG) at Tsinghua University, supervised by Prof. Jie Tang. My research focuses on LLM self-training, reasoning, agents, and data mining. I was a visiting student researcher in the Computing + Mathematical Sciences (CMS) Department at Caltech, hosted by Prof. Yisong Yue. I received my Master's degree from the School of Software, Tsinghua University, in 2021, advised by Prof. Ping Luo.

PS: We are actively looking for self-motivated Ph.D., M.S., and undergraduate students to collaborate with Prof. Chua Tat-Seng and me through CSC, RA, visiting positions, or remote internships in LLM/VLM reasoning, coding, LLM agents, safety, and LLM/VLM RL. If you are interested, please feel free to send your CV to zd18@tsinghua.org.cn or zhangdan25@nus.edu.sg. All CVs will be carefully reviewed!

张丹 / zd18@tsinghua.org.cn / Bio / Google Scholar / GitHub

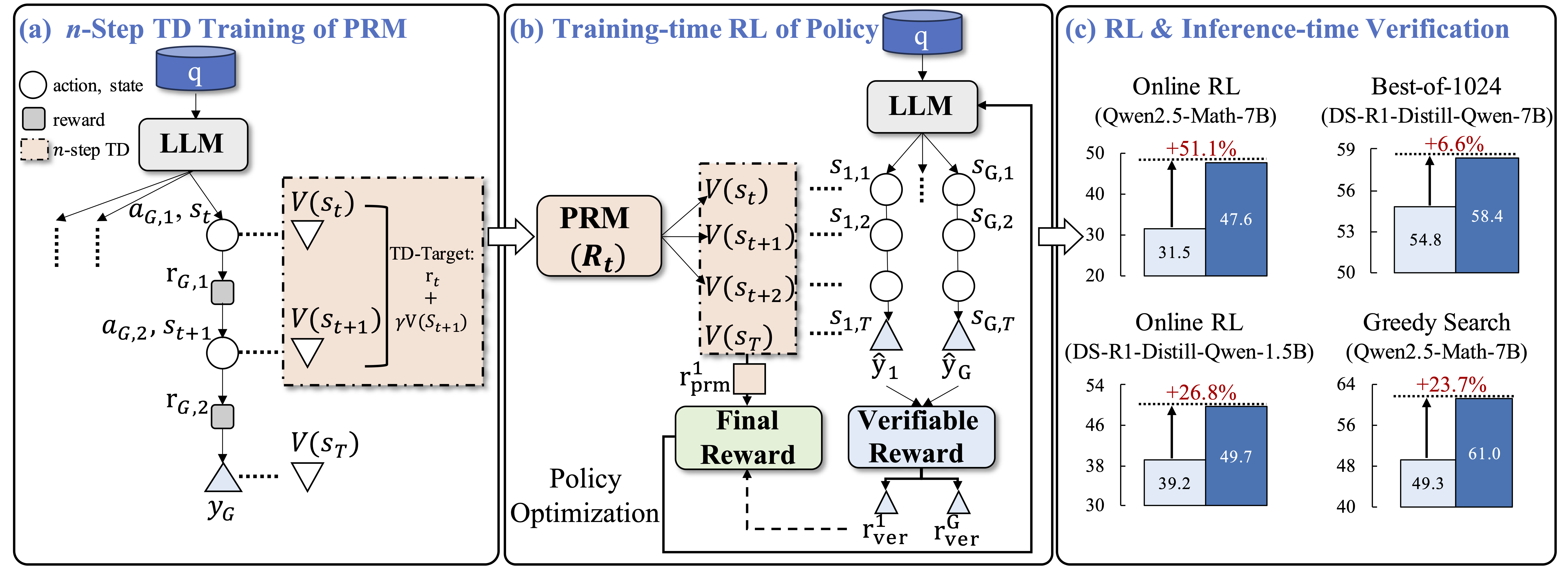

Dan Zhang*, Min Cai*, Jonathan Light, Ziniu Hu, Yisong Yue, and Jie Tang
arXiv, 2025
arXiv / code / TD data / Policy Model / Reward Model

TDRM is a method for learning smoother and more reliable reward models by minimizing temporal differences (TD), for use in both training-time reinforcement learning and inference-time verification. When combined with Reinforcement Learning with Verifiable Rewards (RLVR), TD-trained process reward models (PRMs) lead to more data-efficient RL and yield higher-quality language model policies across 8 model variants from 5 model series.
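
The temporal-difference idea can be illustrated with a generic one-step TD(0) target, in which each step's value is regressed toward the immediate reward plus the (discounted) value of the next step. The sketch below is a minimal illustration under stated assumptions, not TDRM's actual objective: the per-step `values` stand in for a PRM's outputs, the trajectory is assumed to have a sparse reward only at the final step, and the function names are hypothetical.

```python
from typing import List


def td_targets(values: List[float], rewards: List[float], gamma: float = 1.0) -> List[float]:
    """One-step TD(0) targets y_t = r_t + gamma * V(s_{t+1}) for one trajectory.

    values[t] is a (hypothetical) reward model's value estimate after step t.
    The state after the final step is treated as terminal, so its value is 0.
    """
    if len(values) != len(rewards):
        raise ValueError("values and rewards must have the same length")
    targets = []
    for t, r in enumerate(rewards):
        next_value = values[t + 1] if t + 1 < len(values) else 0.0
        targets.append(r + gamma * next_value)
    return targets


def td_loss(values: List[float], rewards: List[float], gamma: float = 1.0) -> float:
    """Mean squared TD error. In real training the targets would be held fixed
    (no gradient through them); plain floats make that implicit here."""
    targets = td_targets(values, rewards, gamma)
    return sum((v - y) ** 2 for v, y in zip(values, targets)) / len(values)


# Hypothetical three-step trajectory with a reward only at the end.
print(td_targets([0.5, 0.7, 0.9], [0.0, 0.0, 1.0]))  # [0.7, 0.9, 1.0]
```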

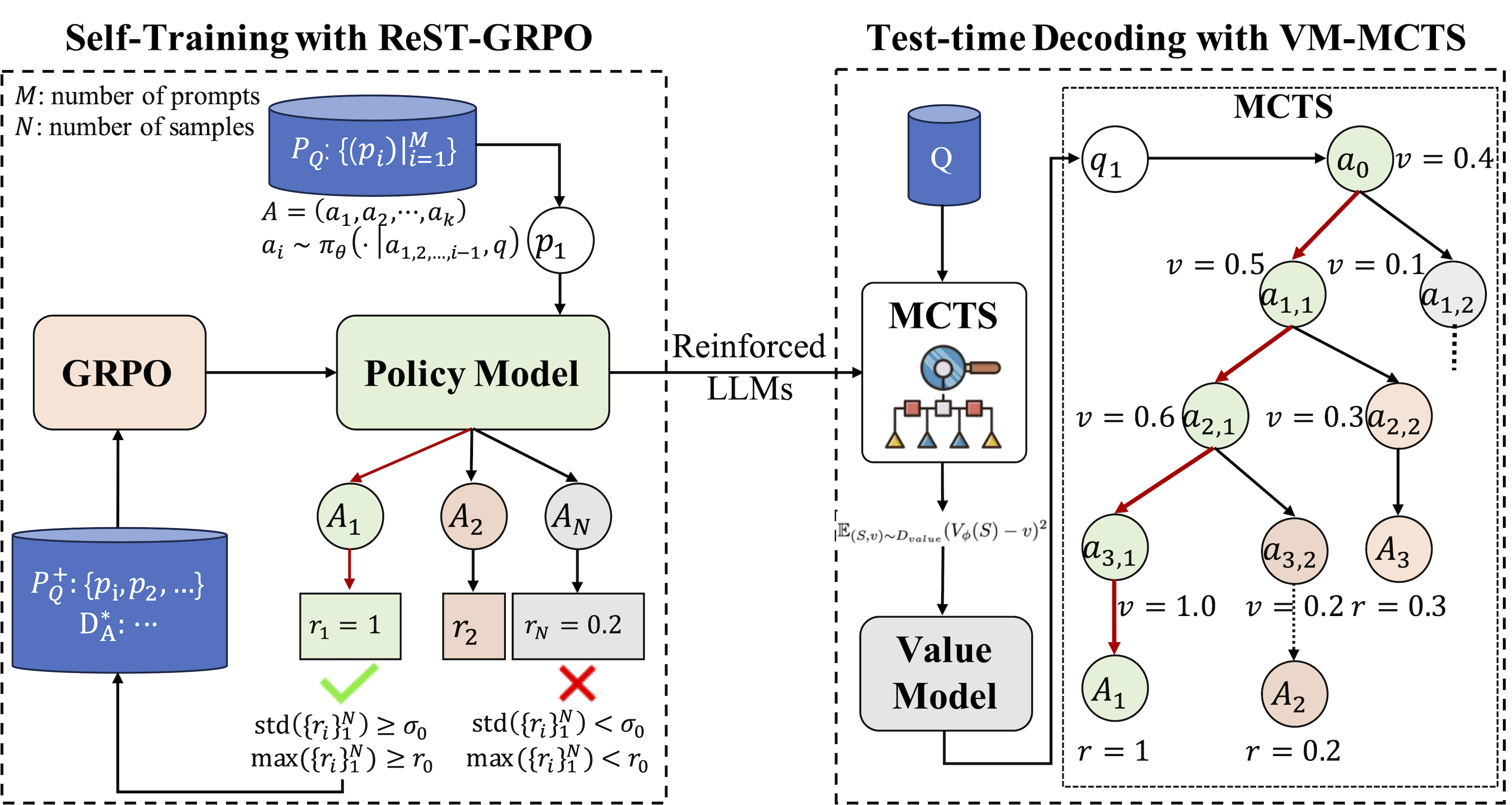

Sining Zhoubian, Dan Zhang, and Jie Tang
arXiv, 2025
arXiv / code

ReST-RL implements a two-stage reinforcement learning pipeline for large language models (LLMs).
Stage 1, Self-Training (policy improvement via ReST-GRPO): sample on program synthesis datasets, process the generated completions into compatible training data, and train the policy with an optimized group relative policy optimization (GRPO) routine.
Stage 2, Value Model Training and Assisted Decoding with VM-MCTS: collect reward signals with MCTS-based sampling, process them into reward data, and train a value model that assists decoding.
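
Stage 1 builds on group relative policy optimization, whose core step scores each sampled completion relative to the other completions for the same prompt. The sketch below shows only that standard group-normalized advantage; the specific optimizations in ReST-GRPO are not reproduced, the use of the population standard deviation and of `eps` are assumptions, and the pass/fail rewards are hypothetical.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Standardize the rewards of completions sampled for the same prompt.

    A_i = (r_i - mean(r)) / (std(r) + eps), so each completion is scored
    relative to its own group rather than against a learned baseline.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical group of 4 programs for one prompt: 1.0 = passes the tests.
print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
```

Completions that beat their group average receive positive advantages, so the policy-gradient update favors them; a group in which every completion earns the same reward contributes zero advantage.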

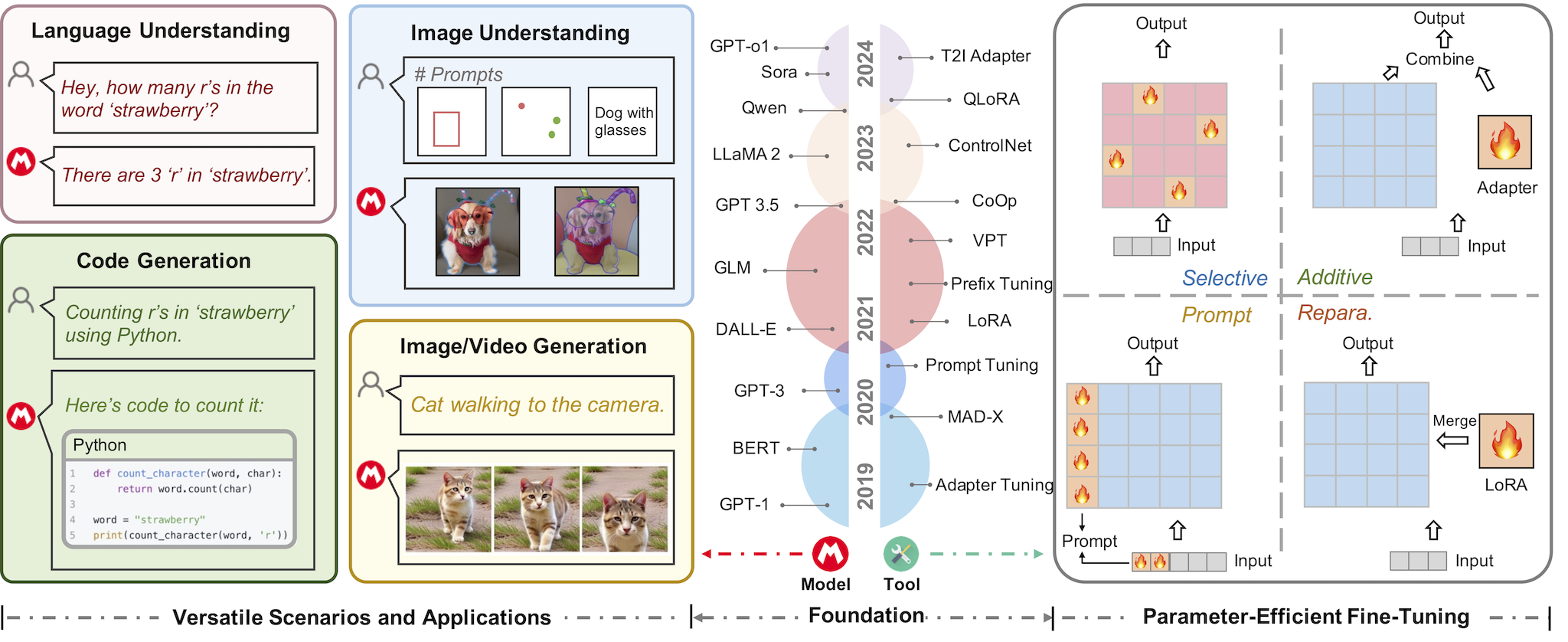

Dan Zhang*, Tao Feng*, Lilong Xue*, Yuandong Wang, Yuxiao Dong, and Jie Tang
arXiv, 2025
arXiv / paper list / homepage

This survey aims to provide a comprehensive overview of parameter-efficient fine-tuning (PEFT) techniques applied to diverse foundation models (FMs) and to address critical gaps in understanding their techniques, trends, and applications. It is intended as a resource for both newcomers and experts seeking to understand and apply PEFT across FMs.
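
LoRA is one widely used PEFT technique: the pretrained weight stays frozen and only a low-rank update is trained. The pure-Python sketch below (row-vector convention; shapes and helper names are hypothetical) shows the forward pass and why the trainable parameter count drops from d_in * d_out to r * (d_in + d_out). It illustrates the general idea and is not code from the survey.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_forward(x: Matrix, w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """h = x W + (alpha / r) * x A B.

    x: (n x d_in) inputs, W: (d_in x d_out) frozen pretrained weight,
    A: (d_in x r) and B: (r x d_out) are the only trainable parameters.
    """
    r = len(b)  # LoRA rank
    base = matmul(x, w)
    update = matmul(matmul(x, a), b)
    scale = alpha / r
    return [[h + scale * u for h, u in zip(row_h, row_u)]
            for row_h, row_u in zip(base, update)]


def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """Parameters in A and B, versus d_in * d_out for full fine-tuning."""
    return r * (d_in + d_out)


print(lora_trainable_params(4096, 4096, 8), 4096 * 4096)  # 65536 16777216
```

Initializing B to zeros, as in the original LoRA recipe, makes the adapted layer start out identical to the pretrained one.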

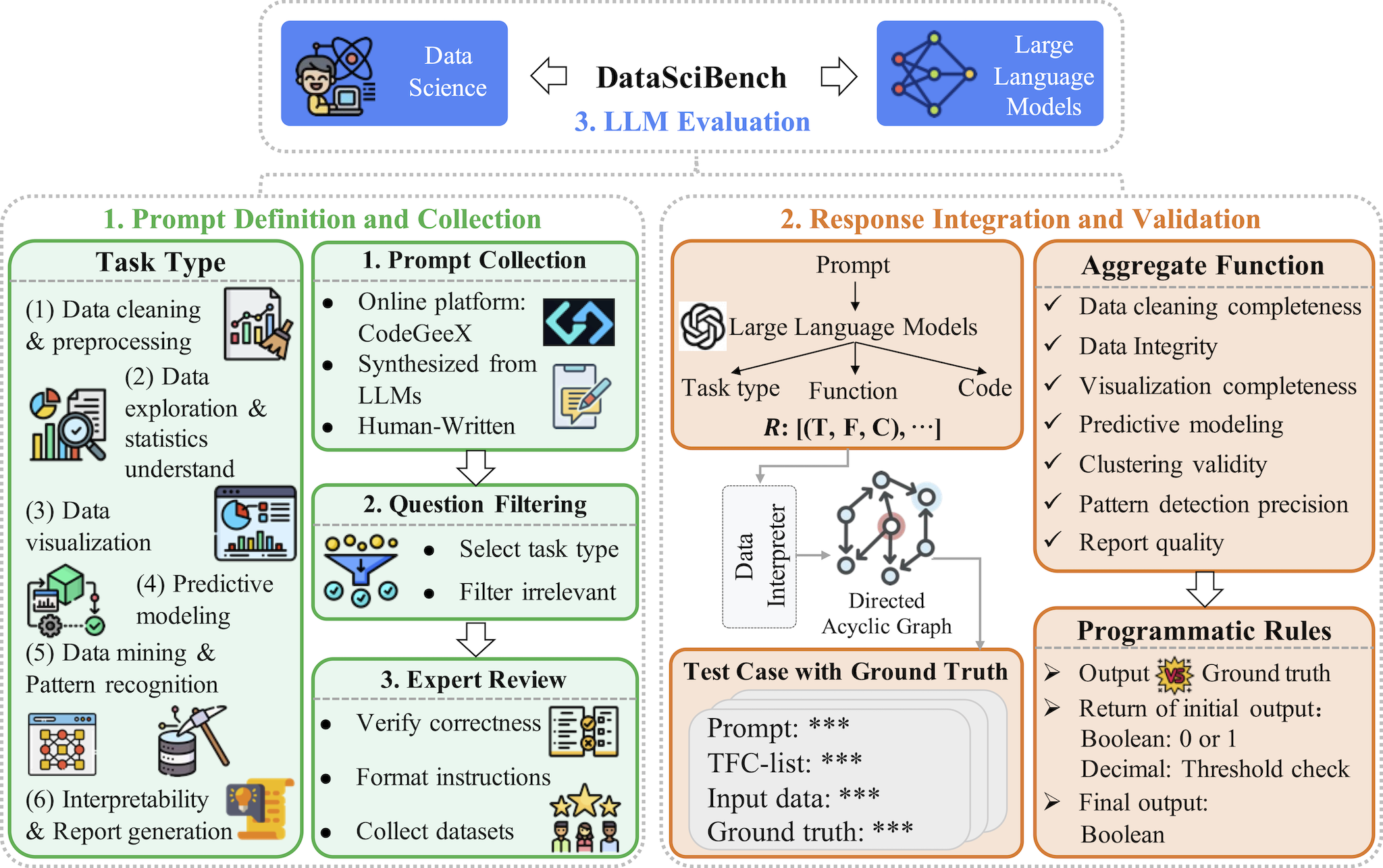

Dan Zhang, Sining Zhoubian, Min Cai, Fengzu Li, Lekang Yang, Wei Wang, Tianjiao Dong, Ziniu Hu, Jie Tang, and Yisong Yue
arXiv, 2025
arXiv / code / data

DataSciBench is a comprehensive benchmark for evaluating the data science capabilities of large language models (LLMs). It proposes a Task-Function-Code (TFC) framework that assesses each code execution outcome using precisely defined metrics and programmatic rules.

Dan Zhang*, Sining Zhoubian*, Ziniu Hu*, Yisong Yue, Yuxiao Dong, and Jie Tang
NeurIPS, 2024
arXiv / code / model / policy data / PRM data

ReST-MCTS* is a reinforced self-training approach that integrates process reward guidance with the tree-search algorithm MCTS* to collect higher-quality reasoning traces, together with per-step values, for training both policy and reward models.
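
MCTS* is the paper's own search variant; as a generic reference point, the sketch below shows standard UCT child selection and value backpropagation over a tree of partial reasoning traces. The exploration constant `c = 1.4`, the `Node` fields, and the idea of backing up a per-step value estimate are assumptions for illustration, not the MCTS* algorithm itself.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A partial reasoning trace in the search tree."""
    value_sum: float = 0.0
    visits: int = 0
    children: List["Node"] = field(default_factory=list)


def uct_score(child: Node, parent_visits: int, c: float = 1.4) -> float:
    """Mean value (exploitation) plus a visit-count bonus (exploration)."""
    if child.visits == 0:
        return math.inf  # expand unvisited steps first
    exploit = child.value_sum / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def select_child(parent: Node, c: float = 1.4) -> Node:
    """Pick the child with the highest UCT score."""
    return max(parent.children, key=lambda ch: uct_score(ch, parent.visits, c))


def backpropagate(path: List[Node], value: float) -> None:
    """Add a value estimate (e.g. a per-step value) to every node on the path."""
    for node in path:
        node.visits += 1
        node.value_sum += value
```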

Xiao Xia*, Dan Zhang*, Zibo Liao, Zhenyu Hou, Tianrui Sun, Jing Li, Ling Fu, and Yuxiao Dong
ACL, 2025
arXiv / code

SceneGenAgent is an LLM-based agent that generates industrial scenes through C# code, aiming to meet the demand for precise measurements and positioning in industrial scene generation. It ensures precise layout planning through a structured and calculable layout format, layout verification, and iterative refinement, so that generated scenes meet the quantitative requirements of industrial scenarios.
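
Layout verification becomes straightforward once a scene is expressed in a calculable format. As a hypothetical sketch (SceneGenAgent's actual format and checks may differ), the code below represents each object by an axis-aligned footprint in meters and reports out-of-bounds objects and pairwise overlaps; in an iterative-refinement loop, such messages could be fed back to the LLM for correction.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import List


@dataclass(frozen=True)
class Box:
    """Axis-aligned footprint of one object; (x, y) is the minimum corner, in meters."""
    name: str
    x: float
    y: float
    width: float
    depth: float

    @property
    def x_max(self) -> float:
        return self.x + self.width

    @property
    def y_max(self) -> float:
        return self.y + self.depth


def overlaps(a: Box, b: Box) -> bool:
    """True if the footprints intersect; touching edges do not count."""
    return a.x < b.x_max and b.x < a.x_max and a.y < b.y_max and b.y < a.y_max


def verify_layout(boxes: List[Box], area_width: float, area_depth: float) -> List[str]:
    """Return human-readable violations; an empty list means the layout passes."""
    problems = []
    for b in boxes:
        if b.x < 0 or b.y < 0 or b.x_max > area_width or b.y_max > area_depth:
            problems.append(f"{b.name} is outside the {area_width} x {area_depth} area")
    for a, b in combinations(boxes, 2):
        if overlaps(a, b):
            problems.append(f"{a.name} overlaps {b.name}")
    return problems
```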

Yifan Xu*, Xiao Liu*, Xueqiao Sun, Siyi Cheng, Hao Yu, Hanyu Lai, Shudan Zhang, Dan Zhang, Jie Tang, and Yuxiao Dong
ACL, 2025
arXiv / code

AndroidLab is a systematic Android agent framework that includes an operation environment with different modalities, an action space, and a reproducible benchmark.

Mengyang Sun, Yihao Wang, Tao Feng, Dan Zhang, Yifan Zhu, and Jie Tang
ICML, 2025
arXiv / code

gRSGD/gRAdamW introduces a novel training strategy for MoE-LoRA that stabilizes and enhances the feature learning process through multi-space projections.

Tao Feng, Wei Li, Didi Zhu, Hangjie Yuan, Wendi Zheng, Dan Zhang, and Jie Tang
ICML, 2025
arXiv / website

We find that forward passes alone are enough to overcome forgetting and introduce the benchmark ZeroFlow to validate this.
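
One common way to optimize with forward passes alone is zeroth-order optimization, which estimates gradients from loss differences along random directions. The sketch below shows a generic two-point estimator driving plain SGD on a toy quadratic; the step size, `eps`, Gaussian directions, and function names are assumptions, and it is not presented as the specific set of methods ZeroFlow evaluates.

```python
import random
from typing import Callable, List

Params = List[float]


def zo_gradient(loss: Callable[[Params], float], theta: Params, eps: float,
                rng: random.Random) -> Params:
    """Two-point zeroth-order gradient estimate from two forward evaluations.

    g = (L(theta + eps*z) - L(theta - eps*z)) / (2*eps) * z, with z ~ N(0, I).
    No backpropagation is needed, only calls to `loss`.
    """
    z = [rng.gauss(0.0, 1.0) for _ in theta]
    plus = [t + eps * zi for t, zi in zip(theta, z)]
    minus = [t - eps * zi for t, zi in zip(theta, z)]
    scale = (loss(plus) - loss(minus)) / (2 * eps)
    return [scale * zi for zi in z]


def zo_sgd(loss: Callable[[Params], float], theta: Params, lr: float = 0.05,
           steps: int = 500, eps: float = 1e-3, seed: int = 0) -> Params:
    """Plain SGD driven by the zeroth-order estimate above."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        g = zo_gradient(loss, theta, eps, rng)
        theta = [t - lr * gi for t, gi in zip(theta, g)]
    return theta


def quadratic(p: Params) -> float:
    """Toy loss with its minimum at (1, 1)."""
    return sum((x - 1.0) ** 2 for x in p)


print(zo_sgd(quadratic, [0.0, 0.0]))
```

Each update costs two forward evaluations and no backward pass; the price is a noisier gradient estimate, which typically calls for smaller steps or more iterations than backpropagation.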

Jiale Cheng*, Xiao Liu*, Cunxiang Wang, Xiaotao Gu, Yida Lu, Dan Zhang, Yuxiao Dong, Jie Tang, Hongning Wang, and Minlie Huang
ICLR, 2025
arXiv / code / data

SPaR is a self-play framework that integrates tree-search self-refinement to yield valid and comparable preference pairs free from distractions. By playing against itself, an LLM uses a tree-search strategy to refine its previous responses with respect to the instruction while minimizing unnecessary variations.

Ming Zhou, Dan Zhang, Yuandong Wang, Yangliao Geng, Yuxiao Dong, and Jie Tang
TKDE, 2025
arXiv

LGB is a social bot detection framework that combines a language model (LM) with a graph neural network (GNN). It fuses information from the textual and graph modalities to improve detection performance on sparsely linked nodes.

Dan Zhang, Ziniu Hu, Sining Zhoubian, Zhengxiao Du, Kaiyu Yang, Zihan Wang, Yisong Yue, Yuxiao Dong, and Jie Tang
NeurIPS Datasets and Benchmarks Track, 2024
arXiv / code & data / model

SciGLM is a suite of scientific language models capable of college-level scientific reasoning. Central to our approach is a novel self-reflective instruction annotation framework that addresses the data scarcity challenge in the science domain. Using this framework, we curated SciInstruct, a diverse, high-quality dataset spanning physics, chemistry, math, and formal proofs.

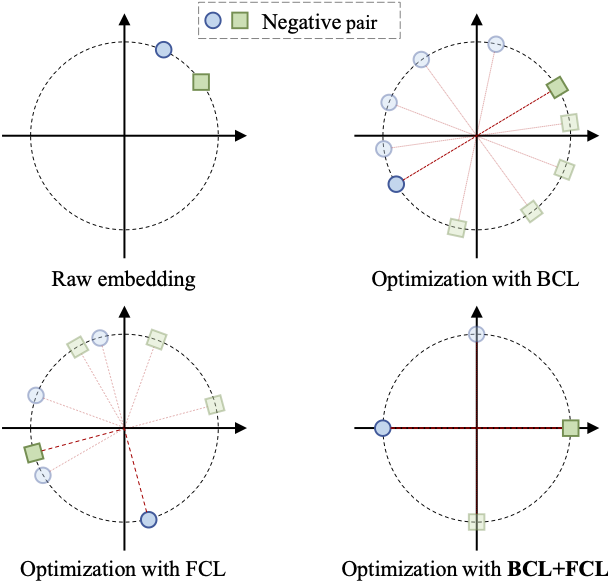

Dan Zhang, Yangliao Geng, Wenwen Gong, Zhongang Qi, Zhiyu Chen, Xing Tang, Ying Shan, Yuxiao Dong, and Jie Tang
WWW, 2024, Oral
arXiv / code & data / slides_pdf

RecDCL is a dual contrastive learning recommendation framework. In this work, we investigate how to employ both batch-wise contrastive learning (BCL) and feature-wise contrastive learning (FCL) for recommendation. We theoretically analyze the relation between BCL and FCL and find that combining them helps eliminate redundant solutions while never missing an optimal solution.
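
To make the two views concrete, the sketch below pairs a standard batch-wise InfoNCE loss (each user's matching item is the positive and the rest of the batch are negatives) with a feature-wise decorrelation penalty on the correlation matrix of embedding dimensions. Both are generic stand-ins chosen for illustration; RecDCL's exact BCL and FCL objectives, the temperature `tau = 0.2`, and all names here are assumptions.

```python
import math
from typing import List

Vec = List[float]


def _l2_normalize(v: Vec) -> Vec:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def batch_infonce(users: List[Vec], items: List[Vec], tau: float = 0.2) -> float:
    """Batch-wise term: (users[i], items[i]) is the positive pair and the other
    items in the batch act as negatives (cosine similarity / tau)."""
    u = [_l2_normalize(x) for x in users]
    v = [_l2_normalize(x) for x in items]
    total = 0.0
    for i, ui in enumerate(u):
        logits = [sum(a * b for a, b in zip(ui, vj)) / tau for vj in v]
        m = max(logits)  # log-sum-exp with max subtraction for stability
        log_denom = m + math.log(sum(math.exp(s - m) for s in logits))
        total += log_denom - logits[i]
    return total / len(u)


def feature_decorrelation(z: List[Vec]) -> float:
    """Feature-wise term: sum of squared off-diagonal entries of the correlation
    matrix of z's columns (each feature standardized over the batch)."""
    n, d = len(z), len(z[0])
    cols = []
    for j in range(d):
        col = [row[j] for row in z]
        mean = sum(col) / n
        std = math.sqrt(sum((x - mean) ** 2 for x in col) / n) or 1.0
        cols.append([(x - mean) / std for x in col])
    penalty = 0.0
    for p in range(d):
        for q in range(d):
            if p != q:
                corr = sum(a * b for a, b in zip(cols[p], cols[q])) / n
                penalty += corr * corr
    return penalty
```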

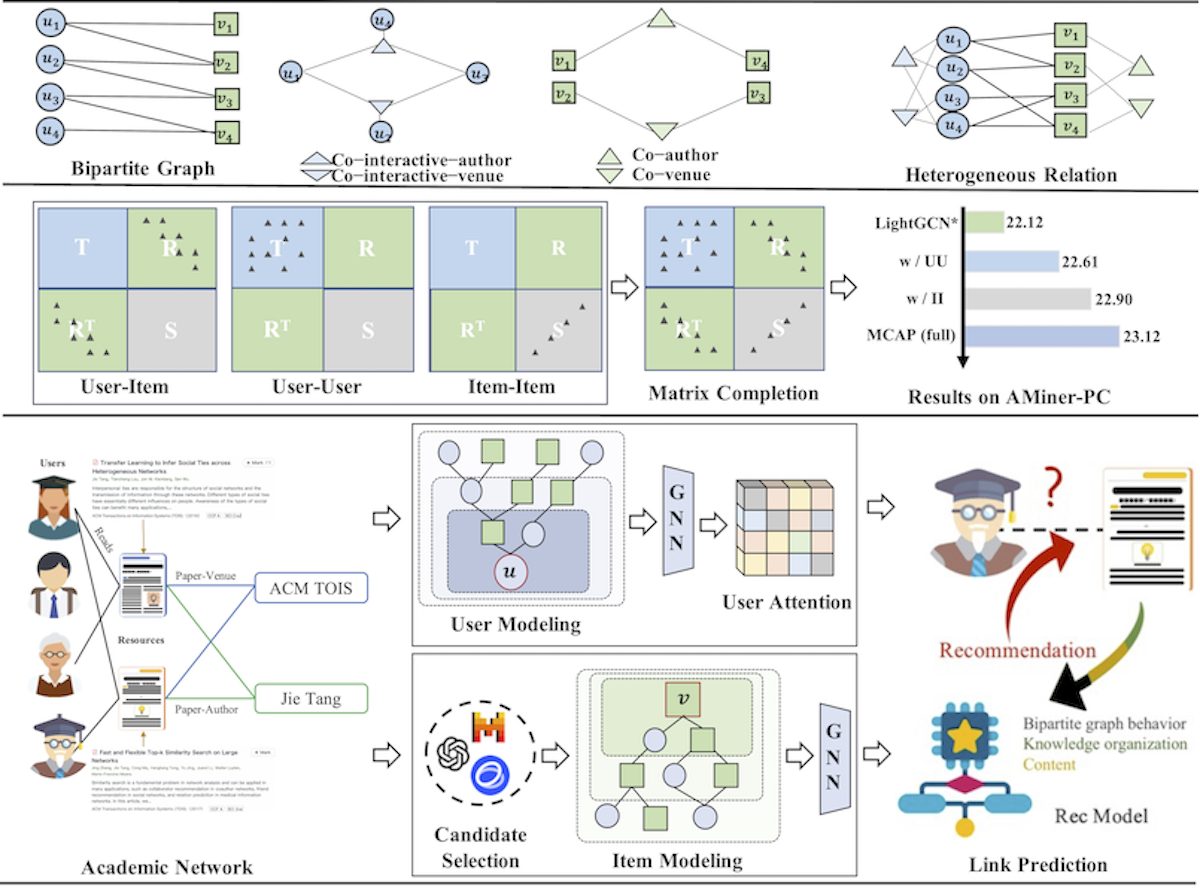

Dan Zhang, Shaojie Zheng, Yifan Zhu, Huihui Yuan, Jibing Gong, and Jie Tang
TOIS, 2024
PDF / code & data

MCAP uses relation-aware GNNs and executes low-pass propagation with matrix completion to enhance academic paper recommendations.
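
A common form of low-pass propagation on graphs is repeated multiplication by the symmetrically normalized adjacency matrix with self-loops. The sketch below shows that generic GCN-style filter in pure Python; it does not include MCAP's relation-aware components or matrix completion, and the function name and number of steps are assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def low_pass_propagate(adj: List[List[int]], x: Matrix, steps: int = 2) -> Matrix:
    """Repeatedly apply S = D^{-1/2} (A + I) D^{-1/2} to node features x.

    Each multiplication by S averages a node with its neighbors, which damps
    signals that vary rapidly across edges.
    """
    n = len(adj)
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # degrees including the self-loop
    s = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    h = [list(row) for row in x]
    for _ in range(steps):
        h = [[sum(s[i][k] * h[k][f] for k in range(n)) for f in range(len(h[0]))]
             for i in range(n)]
    return h
```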

Lilong Xue*, Dan Zhang*, Yuxiao Dong, and Jie Tang
ACL SDT, 2024
arXiv / code / platform

AutoRE is an end-to-end document-level relation extraction (DocRE) model that adopts a novel relation extraction paradigm named RHF (Relation-Head-Facts). AutoRE does not assume that the relation options are known in advance, which better reflects real-world scenarios.

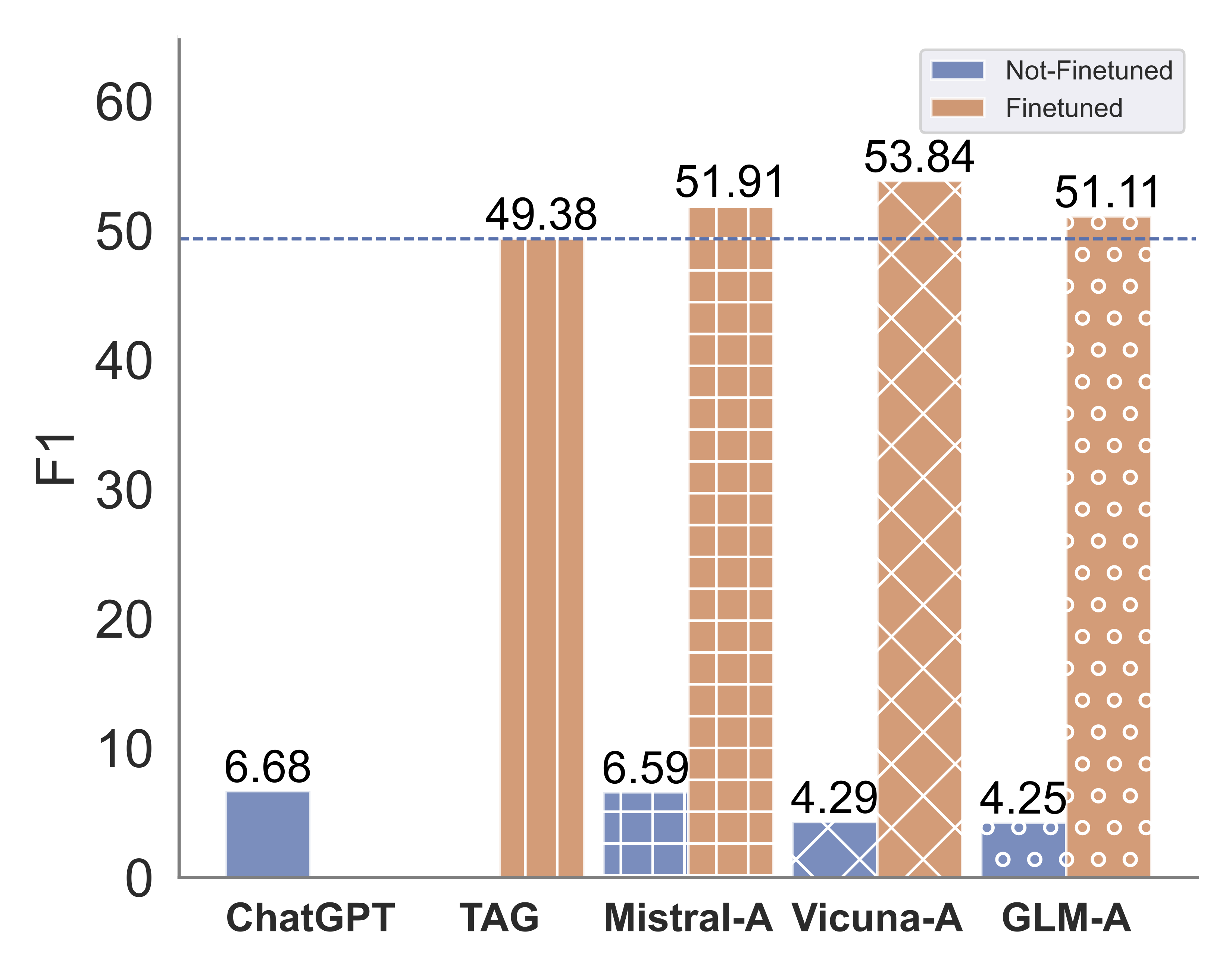

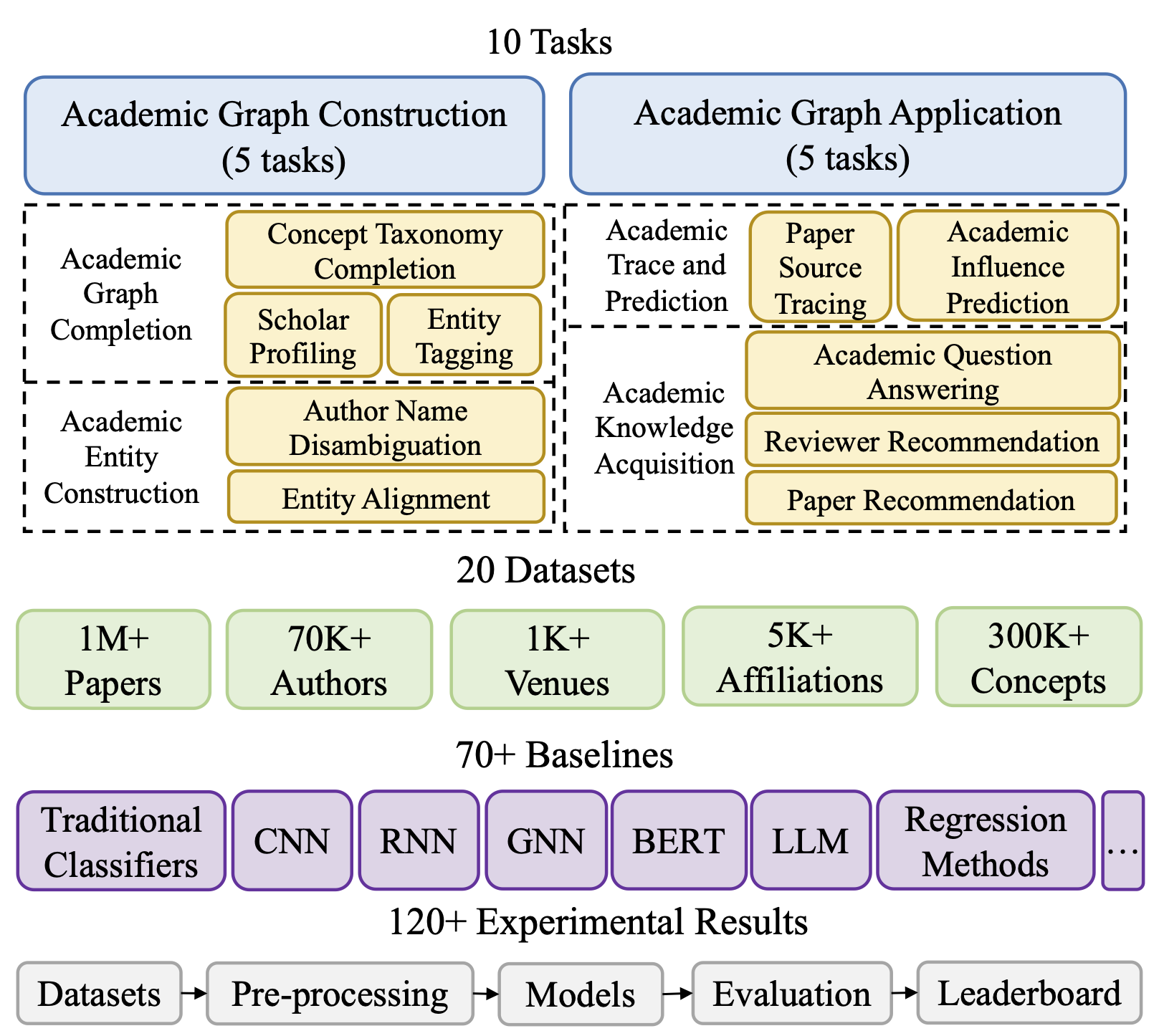

Fanjin Zhang, Shijie Shi, Yifan Zhu, Bo Chen, Yukuo Cen, Jifan Yu, Yelin Chen, Lulu Wang, Qingfei Zhao, Yuqing Cheng, Tianyi Han, Yuwei An, Dan Zhang, Weng Lam Tam, Kun Cao, Yunhe Pang, Xinyu Guan, Huihui Yuan, Jian Song, Xiaoyan Li, Yuxiao Dong, and Jie Tang
KDD, 2024
arXiv / code & data / OAG-Challenge @ KDD Cup 2024

We present OAG-Bench, a comprehensive, multi-aspect, and fine-grained human-curated benchmark based on the Open Academic Graph (OAG). OAG-Bench covers 10 tasks, 20 datasets, 70+ baselines, and 120+ experimental results to date.

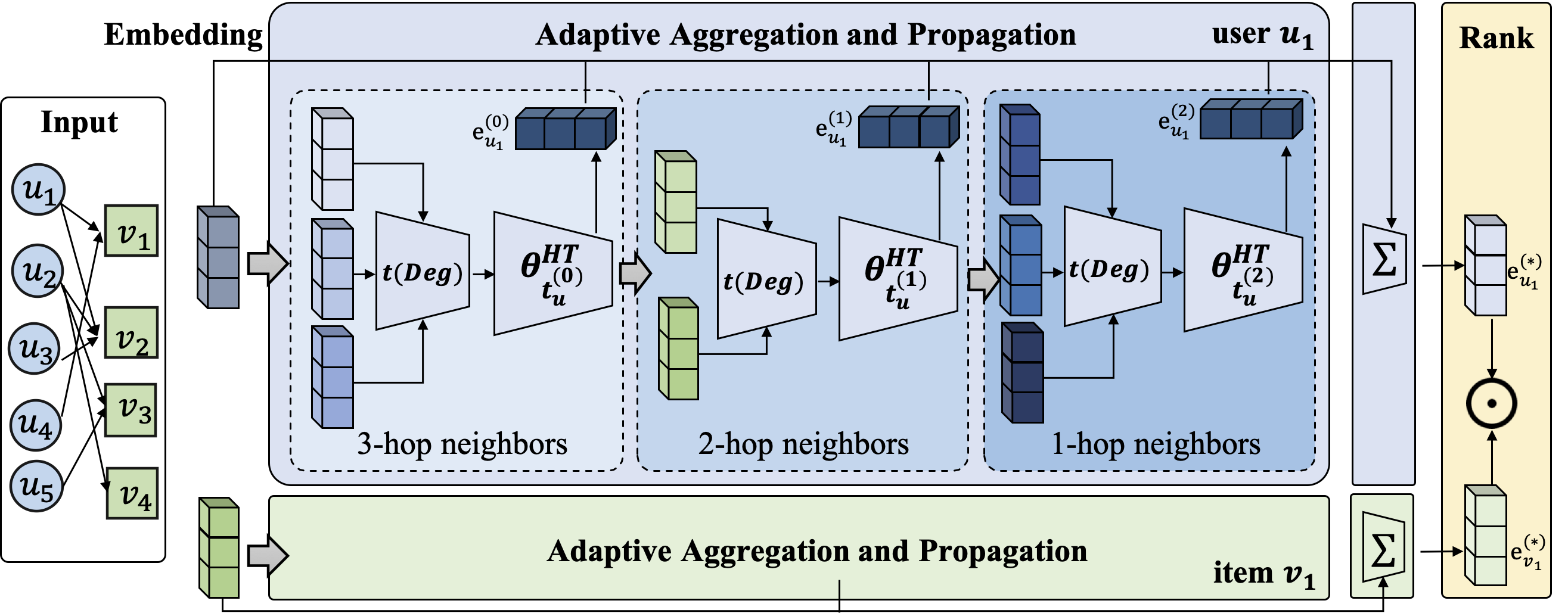

Dan Zhang, Yifan Zhu, Yuxiao Dong, Yuandong Wang, Wenzheng Feng, Evgeny Kharlamov, and Jie Tang
WWW, 2023
PDF / code & data / slides_pdf

ApeGNN develops a node-wise adaptive diffusion mechanism for information aggregation, in which each node adaptively decides its diffusion weights based on its local structure (e.g., degree).

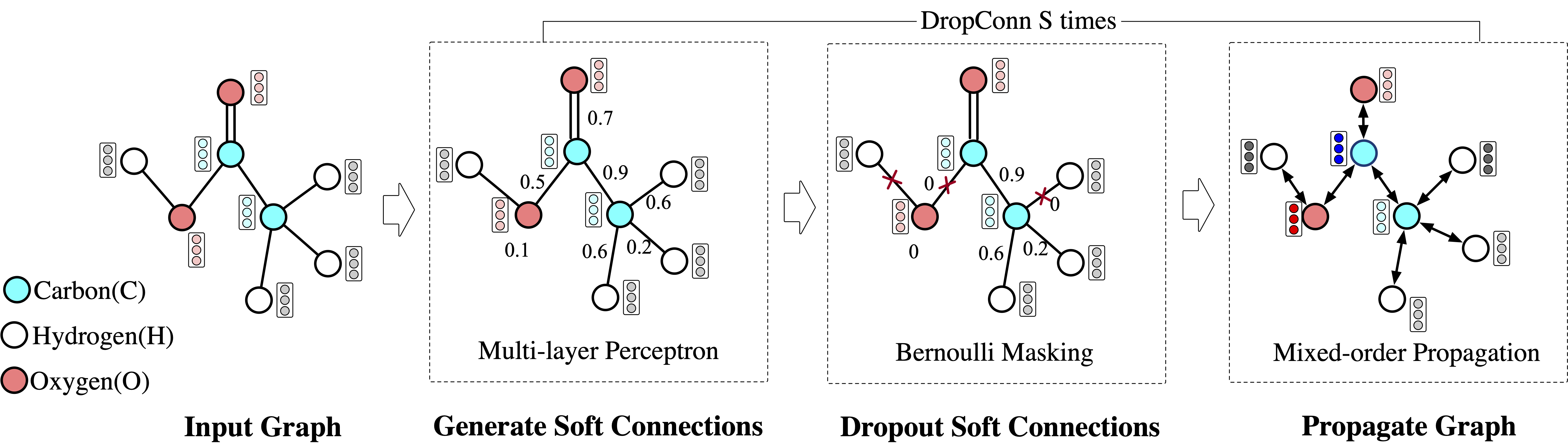

Dan Zhang, Wenzheng Feng, Yuandong Wang, Zhongang Qi, Ying Shan, and Jie Tang
TKDE, 2023
PDF / code & data

DropConn is an adaptive data augmentation strategy that better leverages edge features, assigns weights to chemical bonds to emphasize their importance, and generates robust representations for molecular graph property prediction.
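
As a hypothetical sketch of importance-aware edge dropping (the weighting rule is an assumption, not DropConn's actual scheme), each bond below is removed with probability `base_drop * (1 - w)`, so bonds with a high importance weight `w` survive augmentation more often than unimportant ones.

```python
import random
from typing import List, Sequence, Tuple

Edge = Tuple[int, int]


def importance_weighted_edge_drop(edges: Sequence[Edge], weights: Sequence[float],
                                  base_drop: float, rng: random.Random) -> List[Edge]:
    """Randomly remove edges, dropping important ones less often.

    Each edge is dropped with probability base_drop * (1 - w), where w in [0, 1]
    is a (hypothetical) importance weight for that bond.
    """
    kept = []
    for edge, w in zip(edges, weights):
        if not 0.0 <= w <= 1.0:
            raise ValueError("importance weights must lie in [0, 1]")
        p_drop = base_drop * (1.0 - w)
        if rng.random() >= p_drop:
            kept.append(edge)
    return kept


# Hypothetical 4-atom chain in which bond (1, 2) is marked as most important.
bonds = [(0, 1), (1, 2), (2, 3)]
print(importance_weighted_edge_drop(bonds, [0.2, 1.0, 0.5], base_drop=0.5, rng=random.Random(0)))
```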

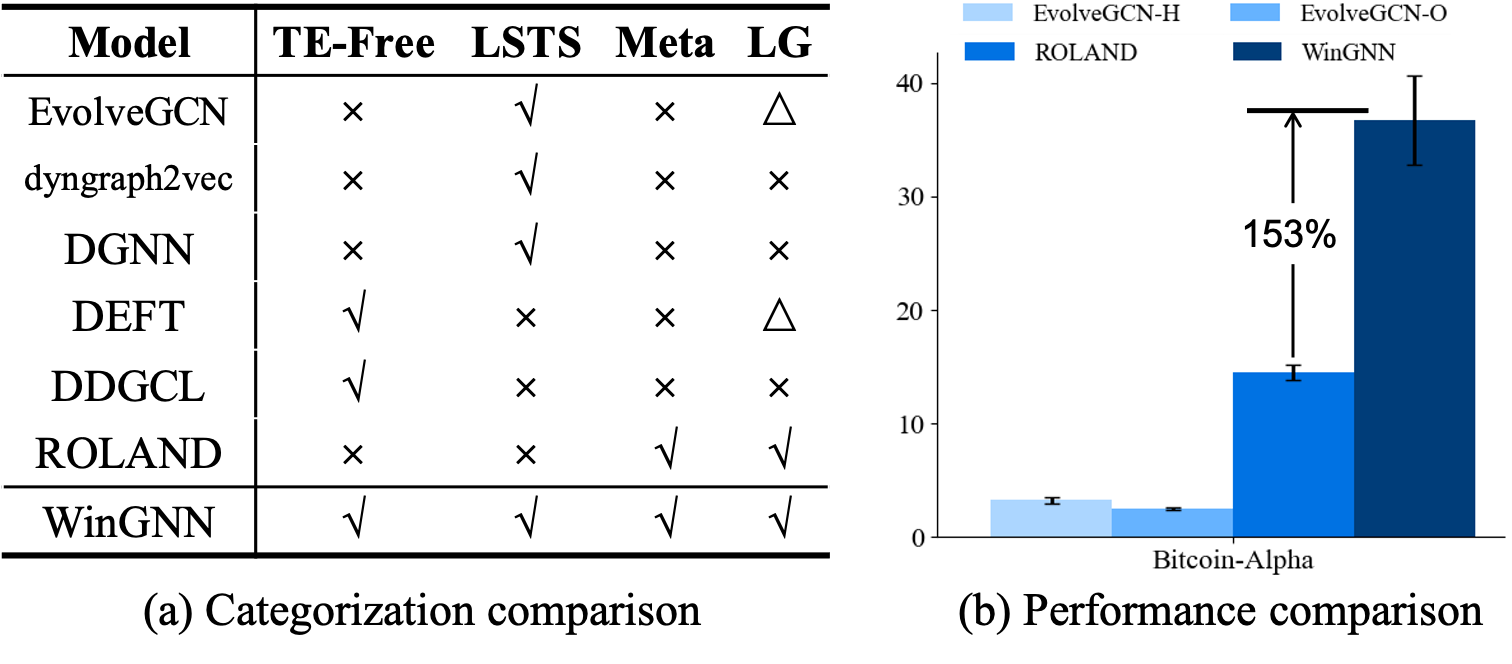

Yifan Zhu, Fangpeng Cong, Dan Zhang, Wenwen Gong, Qika Lin, Wenzheng Feng, Yuxiao Dong, and Jie Tang
KDD, 2023
PDF / code & data

WinGNN models dynamic graphs by combining a simple graph neural network model with meta-learning strategies and implementing a time-encoder-free dynamic graph coding process through a stochastic gradient aggregation mechanism.

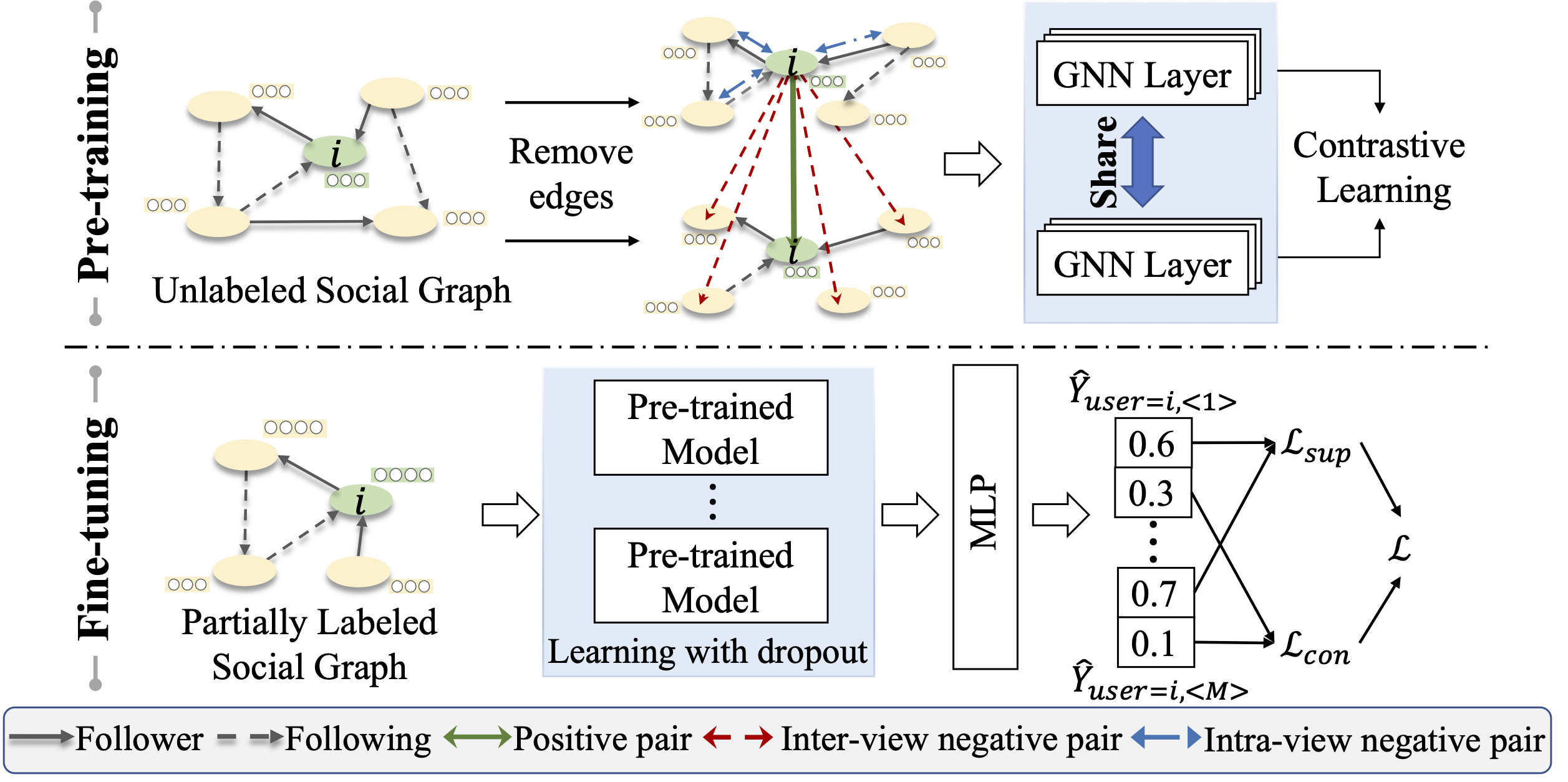

Ming Zhou, Dan Zhang, Yuandong Wang, Yangliao Geng, and Jie Tang
CIKM, 2023

CBD is characterized by a two-stage model learning strategy: a contrastive pre-training stage to mine generalization patterns from massive unlabeled social graphs, followed by a semi-supervised fine-tuning stage to model task-specific knowledge latent in social graphs with a few annotations.

Ming Zhou, Wenzheng Feng, Yifan Zhu, Dan Zhang, Yuxiao Dong, and Jie Tang
ECML-PKDD, 2023, Best Student Paper

We analyze human-bot networks and propose SIRAN, which combines relation attention with an initial residual connection to reduce and prevent the noise aggregated from neighbors, improving the model's ability to distinguish different kinds of nodes on heterophilous social graphs. We then use a consistency loss to boost detection performance when annotated data are limited.
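
An initial residual connection mixes each node's input features back in at every layer. The sketch below shows the generic form `h_next = (1 - alpha) * AGG(h) + alpha * h0`, with a plain mean aggregator standing in for relation attention; the aggregator, `alpha`, and function names are assumptions rather than SIRAN's implementation.

```python
from typing import Callable, List

Matrix = List[List[float]]


def mean_neighbor_aggregator(neighbors: List[List[int]]) -> Callable[[Matrix], Matrix]:
    """Return a function that averages each node's neighbor features."""
    def aggregate(h: Matrix) -> Matrix:
        out = []
        for i, nbrs in enumerate(neighbors):
            if not nbrs:  # an isolated node keeps its own features
                out.append(list(h[i]))
            else:
                out.append([sum(h[j][f] for j in nbrs) / len(nbrs) for f in range(len(h[i]))])
        return out
    return aggregate


def initial_residual_layer(h: Matrix, h0: Matrix, aggregate: Callable[[Matrix], Matrix],
                           alpha: float = 0.1) -> Matrix:
    """h_next = (1 - alpha) * aggregate(h) + alpha * h0.

    Mixing the initial features h0 back in at every layer limits how much
    neighbor information (possibly from dissimilar nodes) can overwrite a node.
    """
    agg = aggregate(h)
    return [[(1 - alpha) * a + alpha * b for a, b in zip(row_a, row_0)]
            for row_a, row_0 in zip(agg, h0)]


# Two connected nodes with opposite features (a heterophilous edge).
h0 = [[1.0], [0.0]]
agg = mean_neighbor_aggregator([[1], [0]])
h1 = initial_residual_layer(h0, h0, agg, alpha=0.5)
h2 = initial_residual_layer(h1, h0, agg, alpha=0.5)
print(h1, h2)  # [[0.5], [0.5]] [[0.75], [0.25]]
```

Pure neighbor averaging would simply swap the two nodes' features at every layer; with the residual term, each node is pulled back toward its own starting feature.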

Website template credit: Jon Barron